
In addition to reaching underserved schools and communities, the mobile lab expands public engagement at parks and festivals. It's outfitted with a full set of instructional materials, such as microscopes and equipment for monitoring water quality, to create hands-on experiences that build awareness of and connections to local waterways.

Read more at https://stroudcenter.org/press/watershed-on-wheels-makes-a-splash-world-water-day/

Tammy Piper, Watershed Specialist and Act 38 Technician with the Franklin County Conservation District in Chambersburg, PA, requested Macroinvertebrates.org imagery for use in the district's outdoor signage highlighting stream restoration projects. We're thrilled to see NSF-supported work used in permanent educational signage that highlights the care and stewardship of watershed ecosystems. Conservation districts are unsung heroes of environmental education and long-term attention to our waterways.

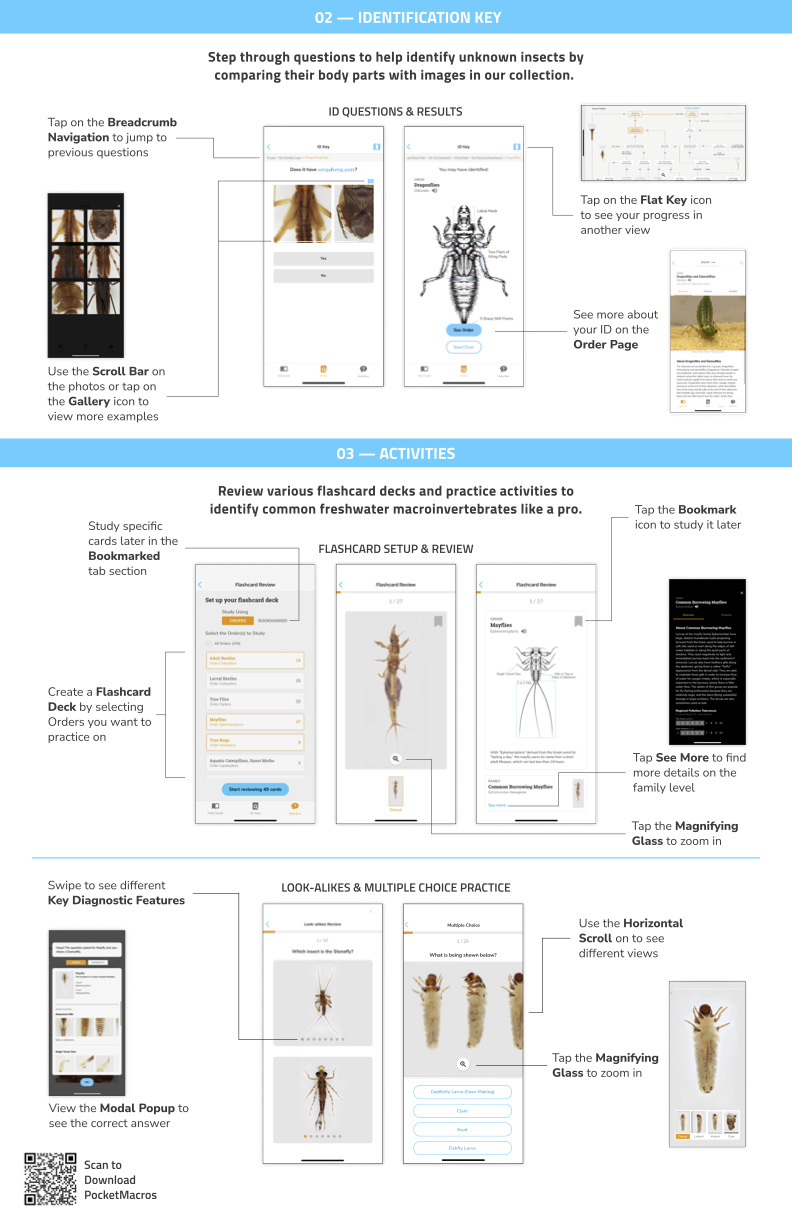

One major artifact I worked on was an online poster for the CitSciVirtual 2021 Conference! With Marti and Camilla, we submitted a poster about Macroinvertebrates.org as “A Digital Tool for Supporting Identification Activities During Water Quality Biomonitoring Trainings.” You can access the poster here.

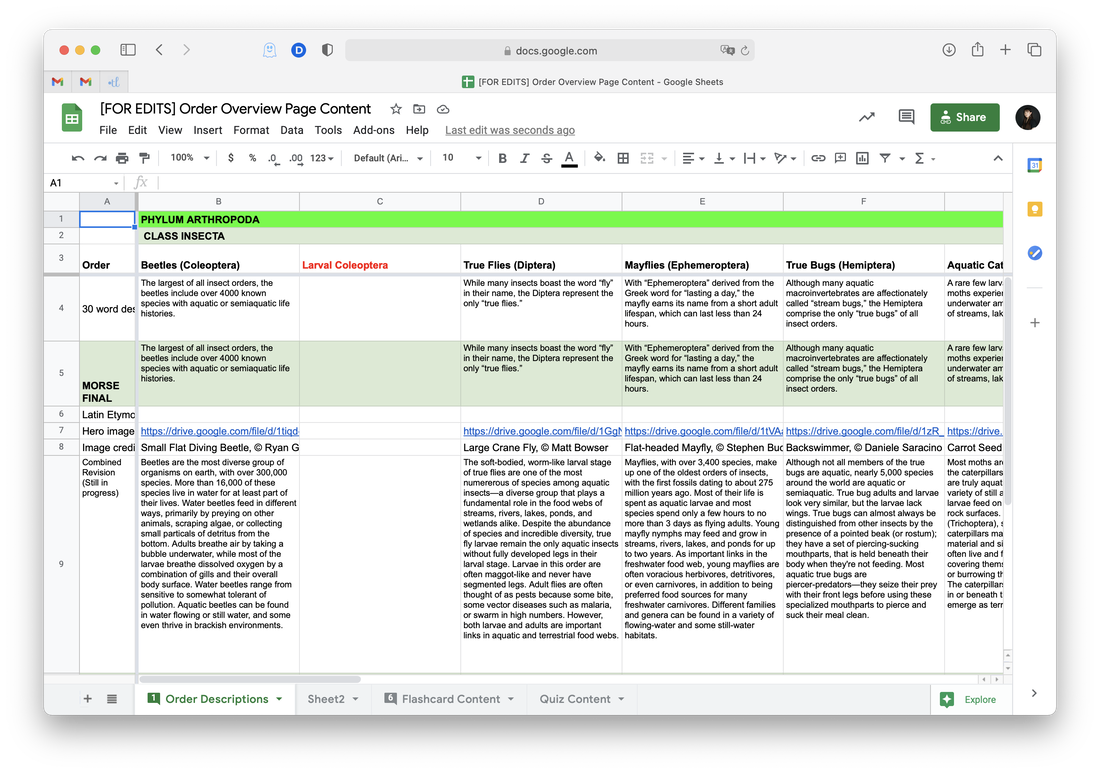

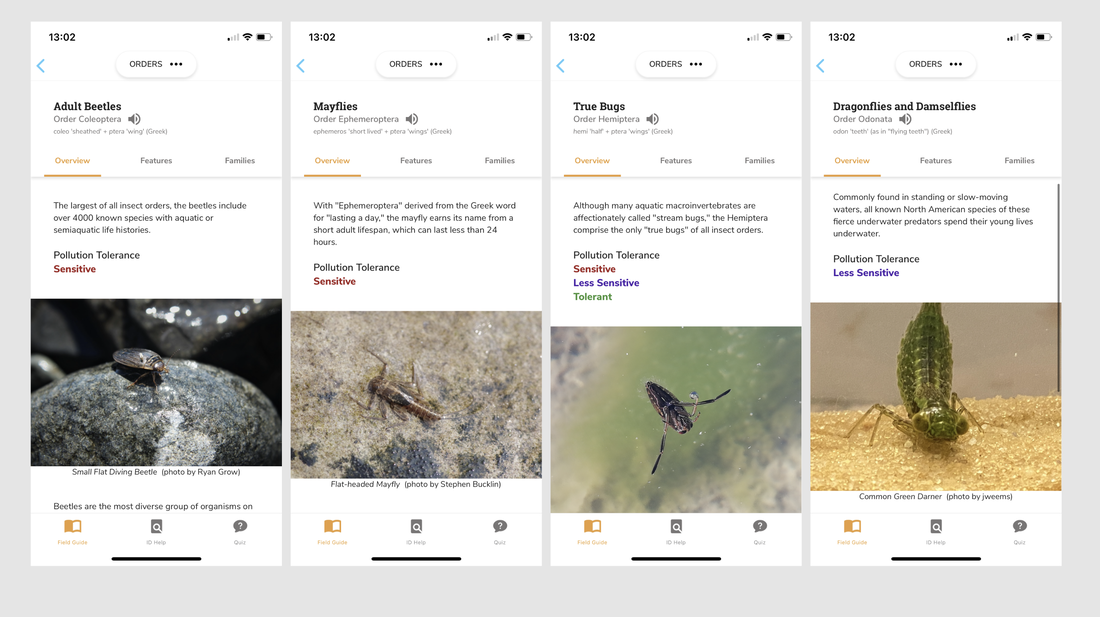

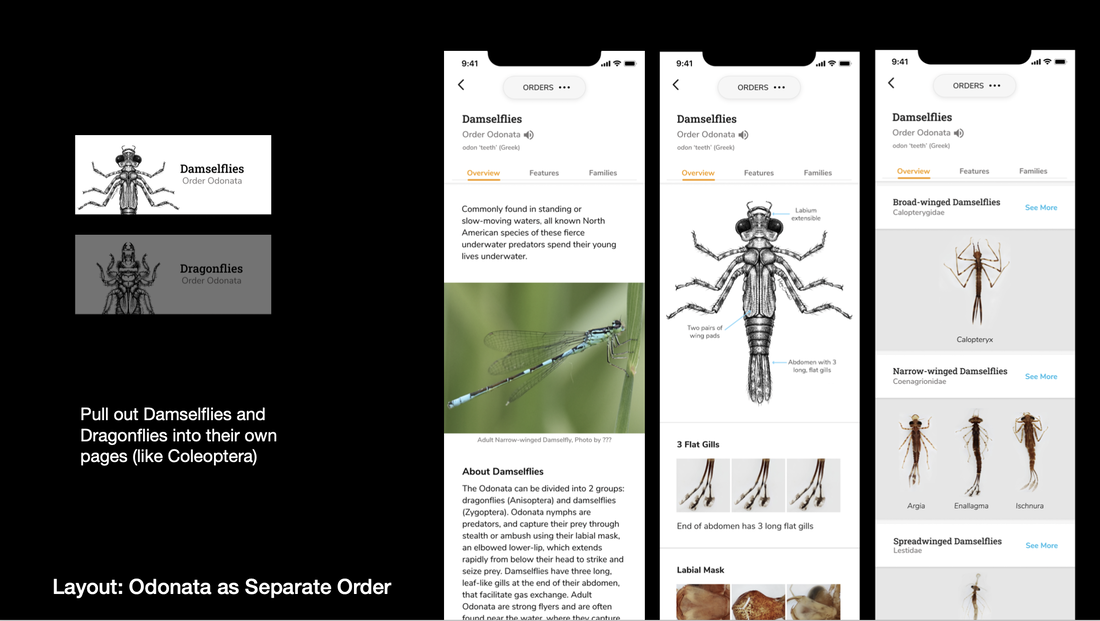

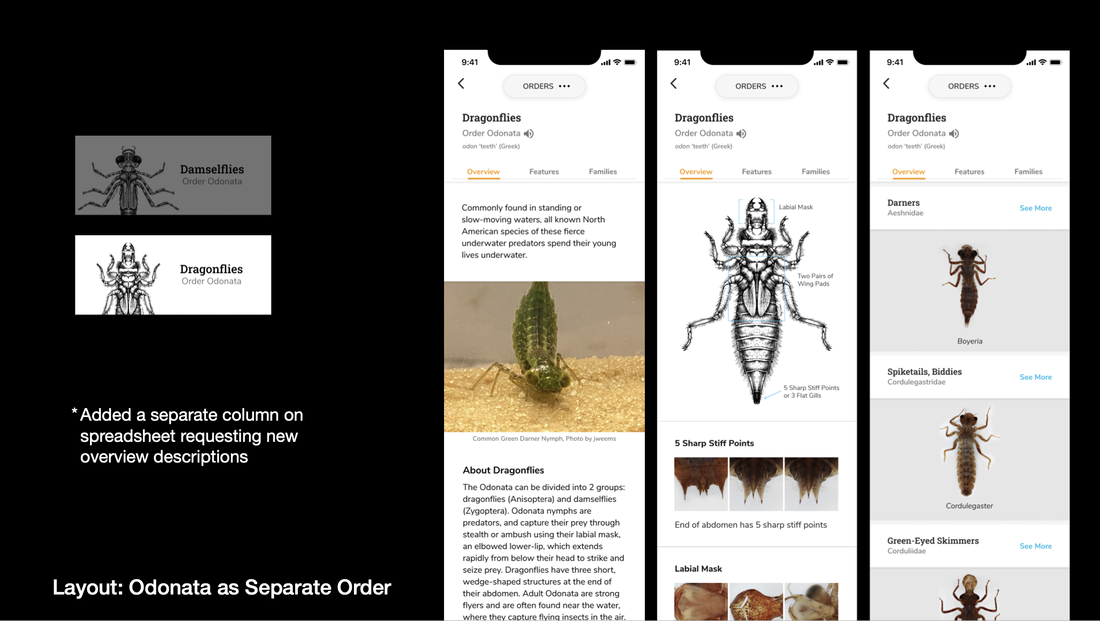

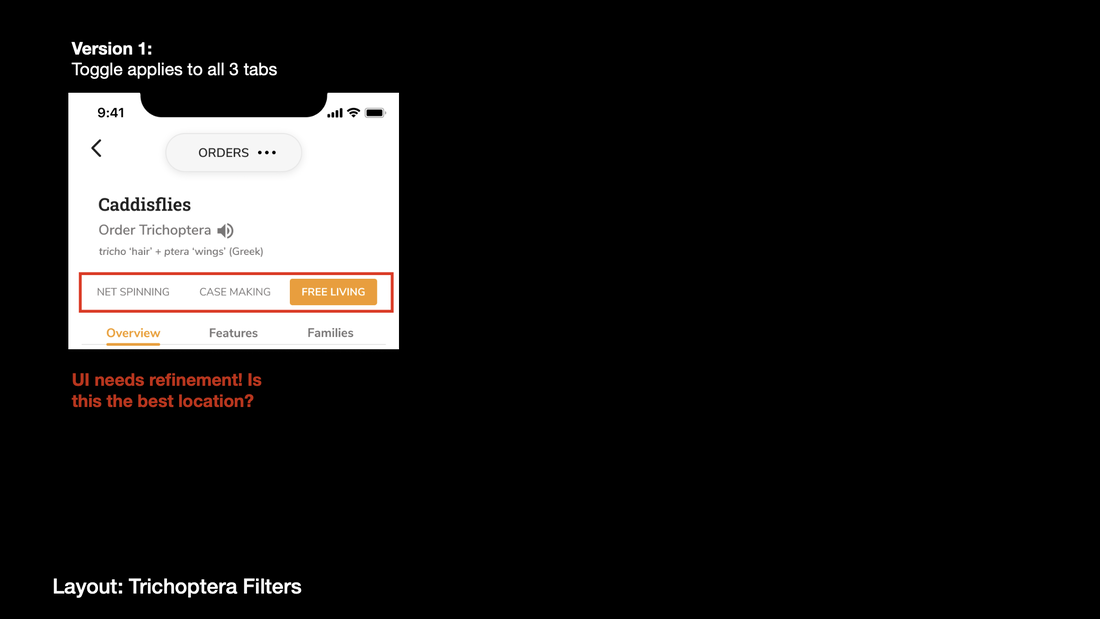

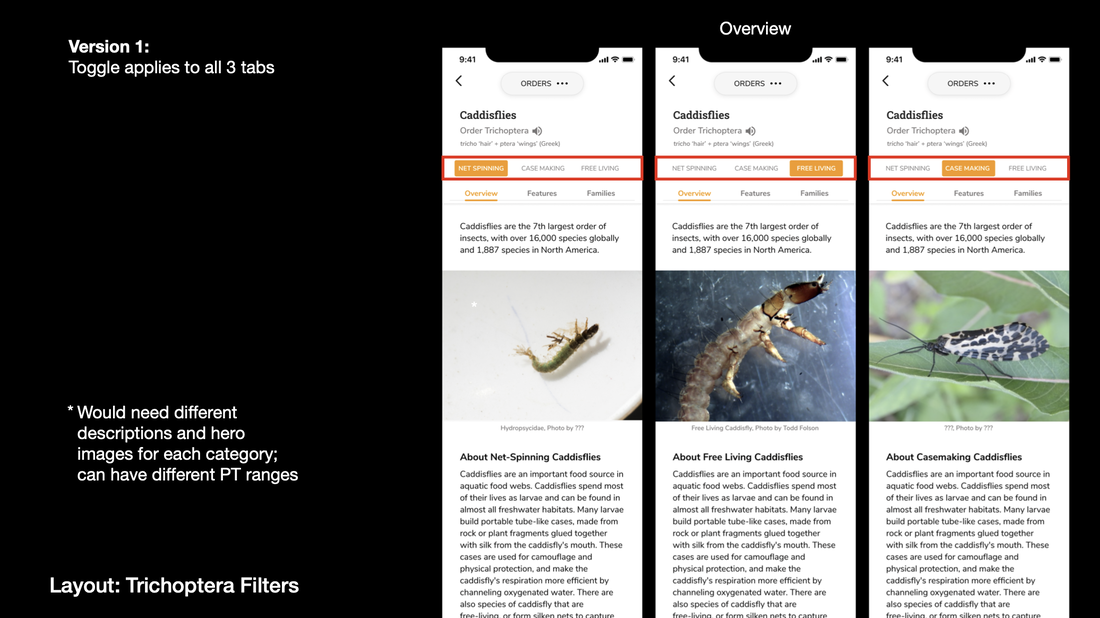

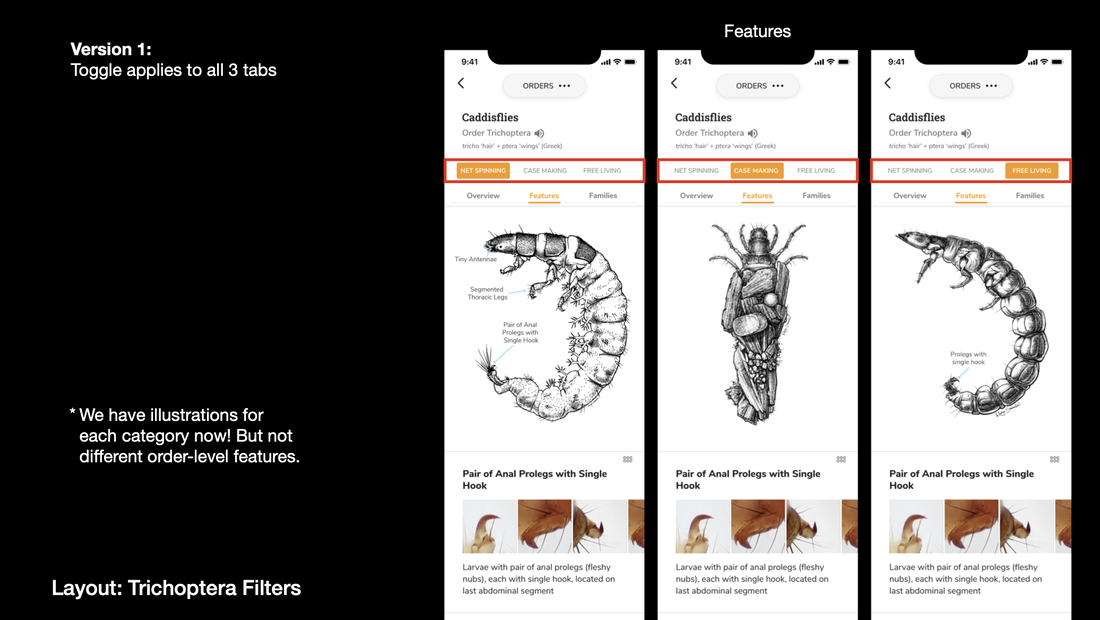

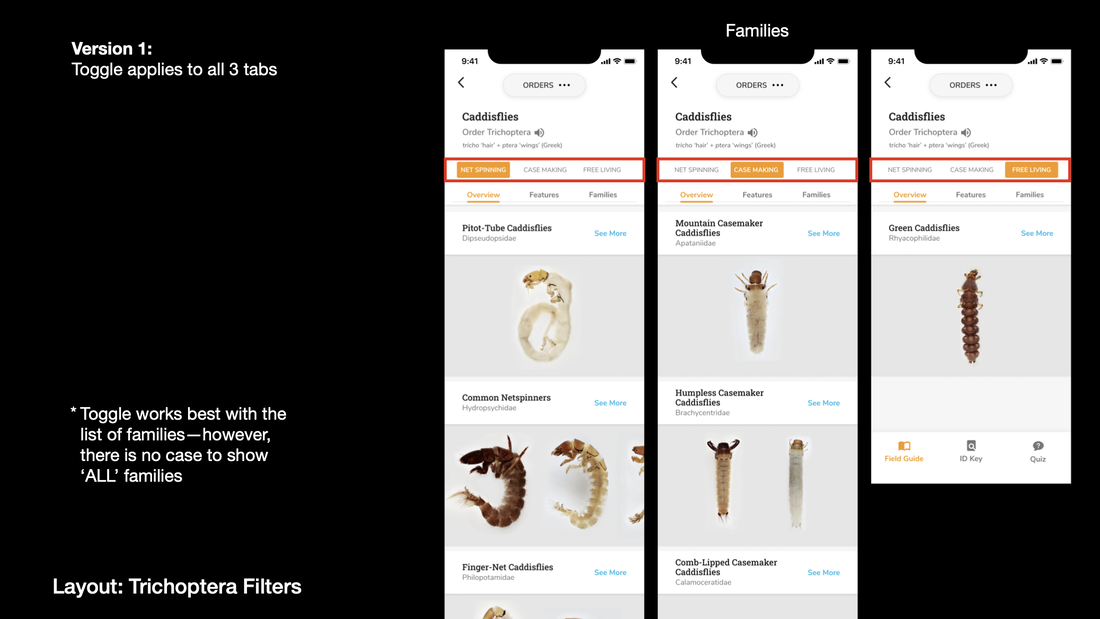

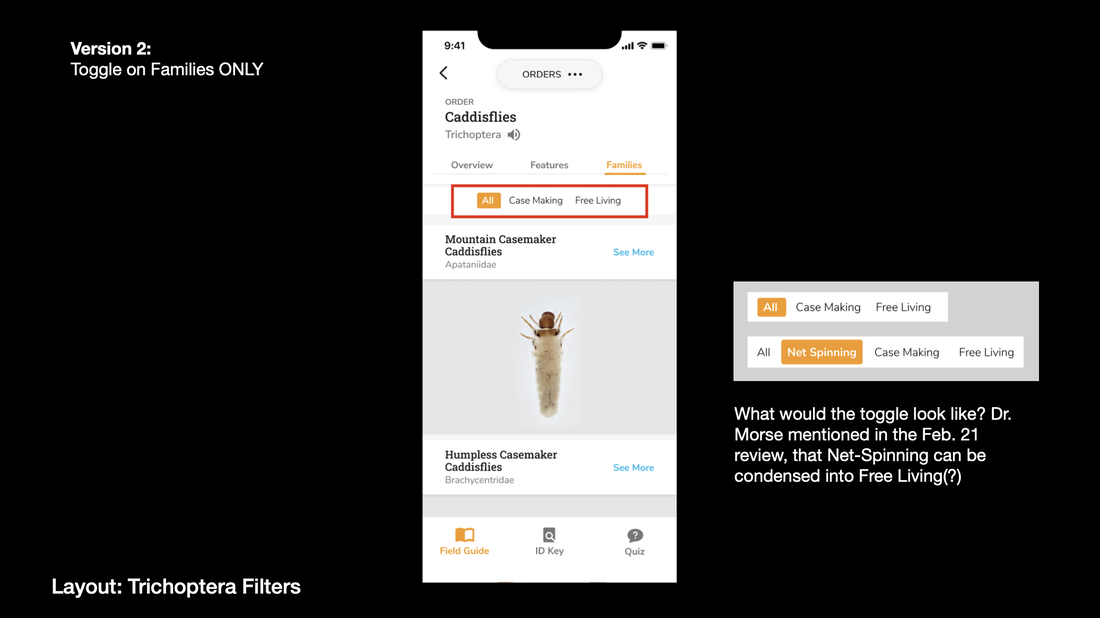

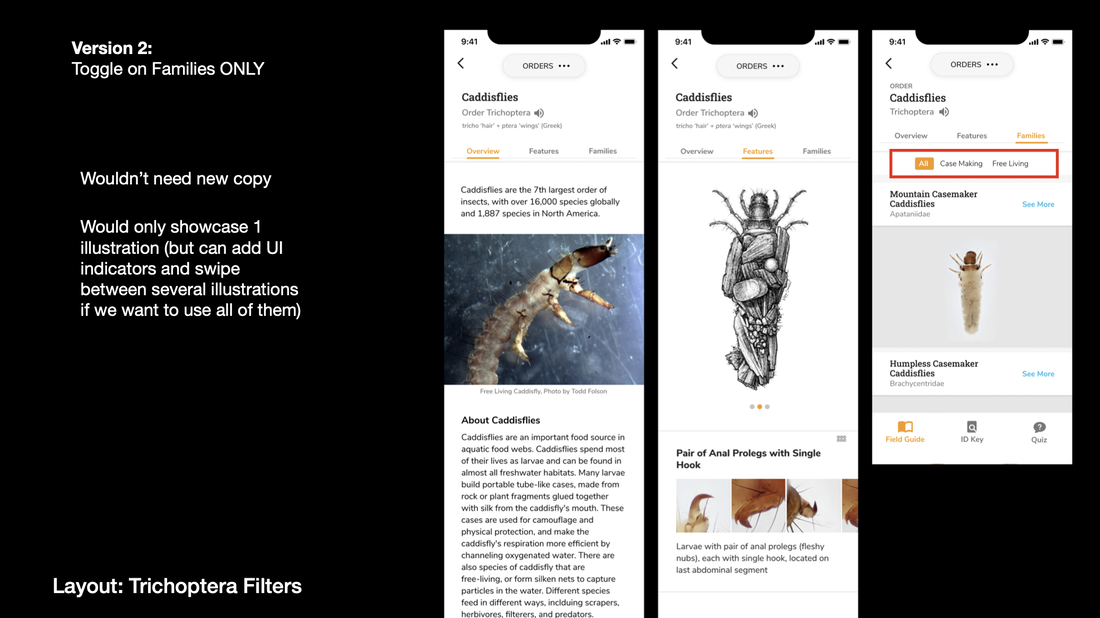

As part of management work for the mobile app, I condensed and revised the descriptions for each insect order, making the content more novice-friendly and less technical. I also dug around iNaturalist for Creative Commons-licensed images of every insect order to use as hero images on each order's overview page. I'm leaving behind my master-list spreadsheet of every specimen's common and scientific names (and more), and hopefully it will be useful for future bug designers as well!

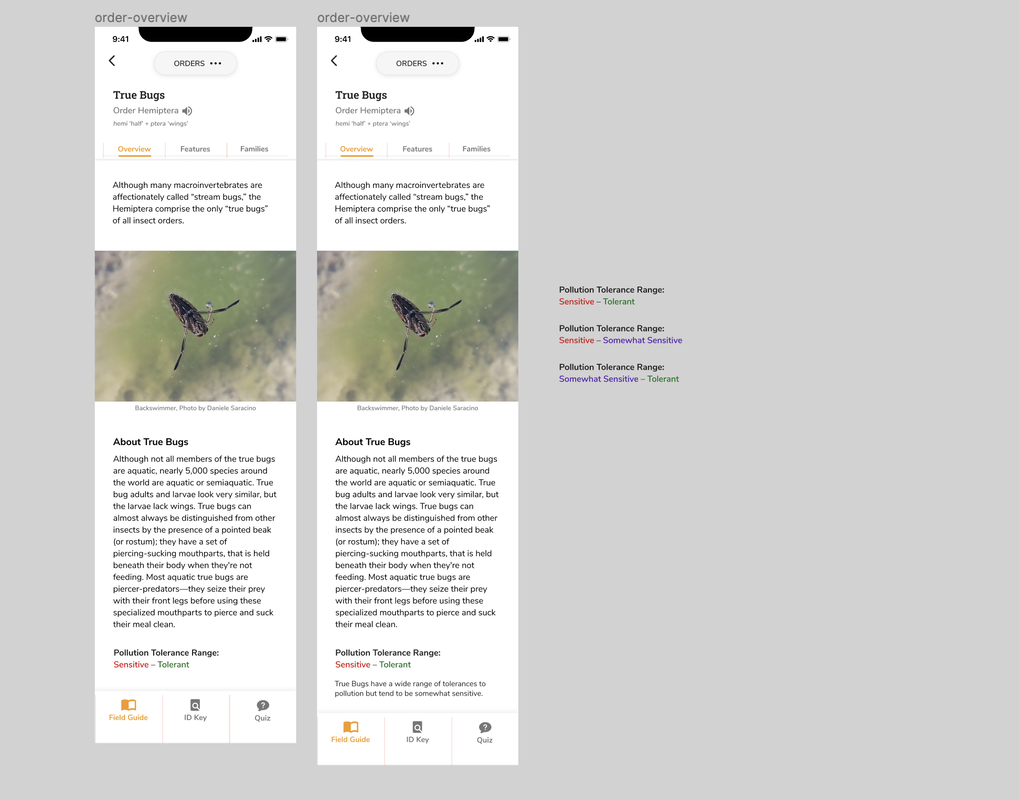

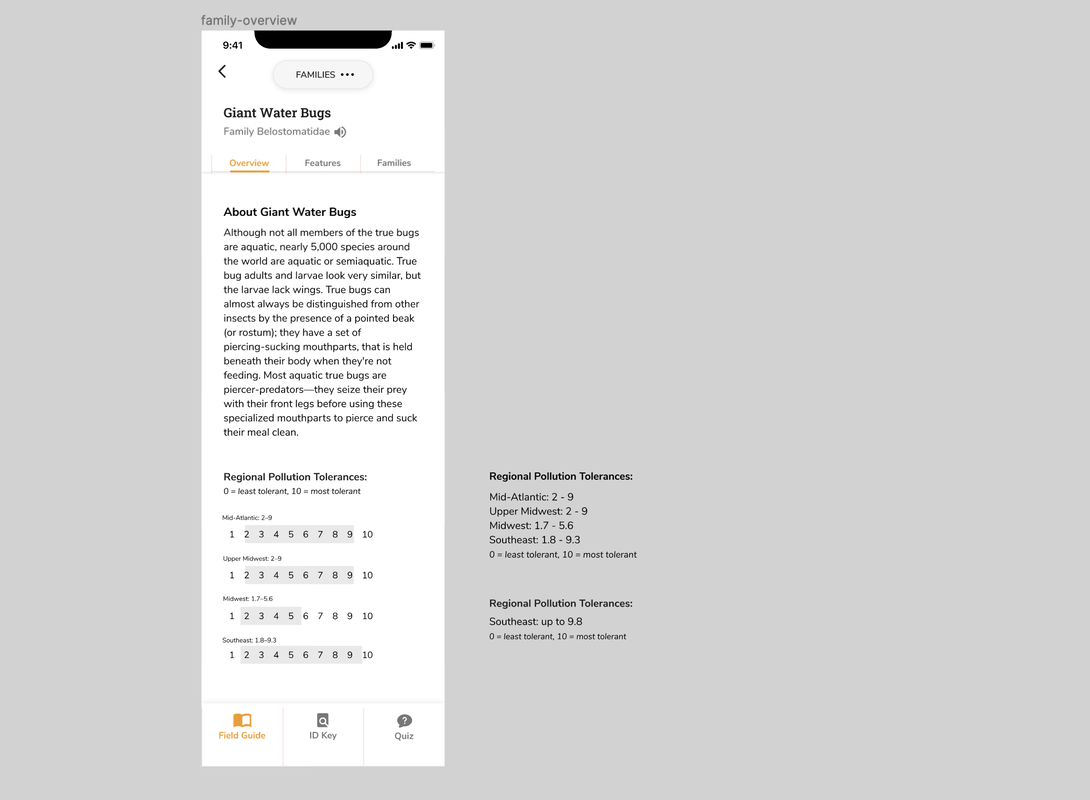

Pollution tolerance has always been a tricky part of the database. How reliable is it at the order level? What's the best language to use: sensitive/insensitive to pollution, or tolerant/intolerant of pollution? It's still not the most resolved design, but I proposed a layout where pollution tolerance sits at the bottom of the overview page. If you read the paragraph description, it leads you into the information about pollution tolerance, giving you more context on why or how the insect is sensitive or insensitive. (Also, some header tweaking: good headers are super useful for providing context!)

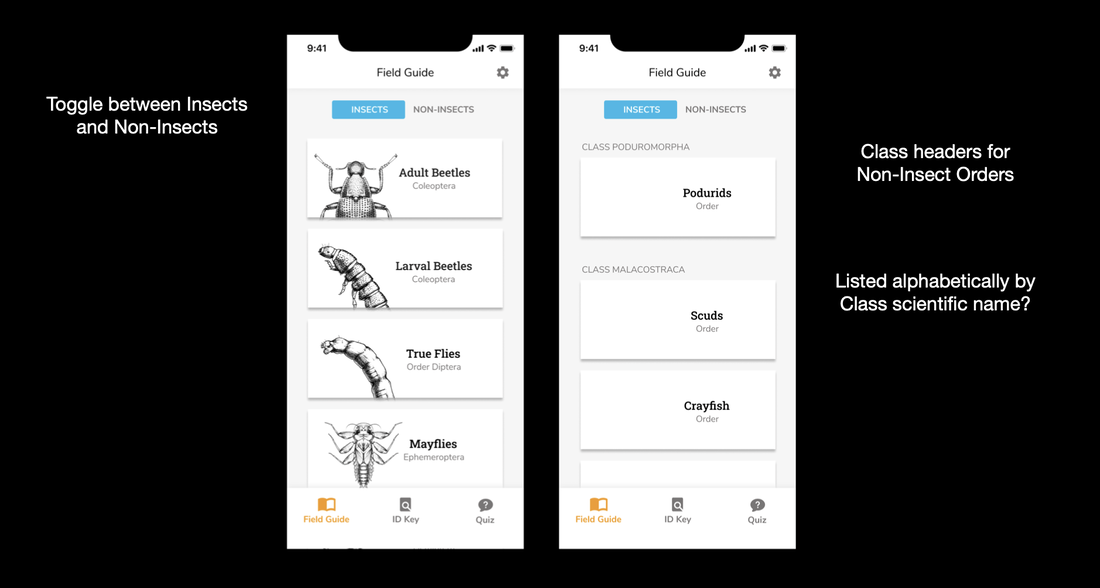

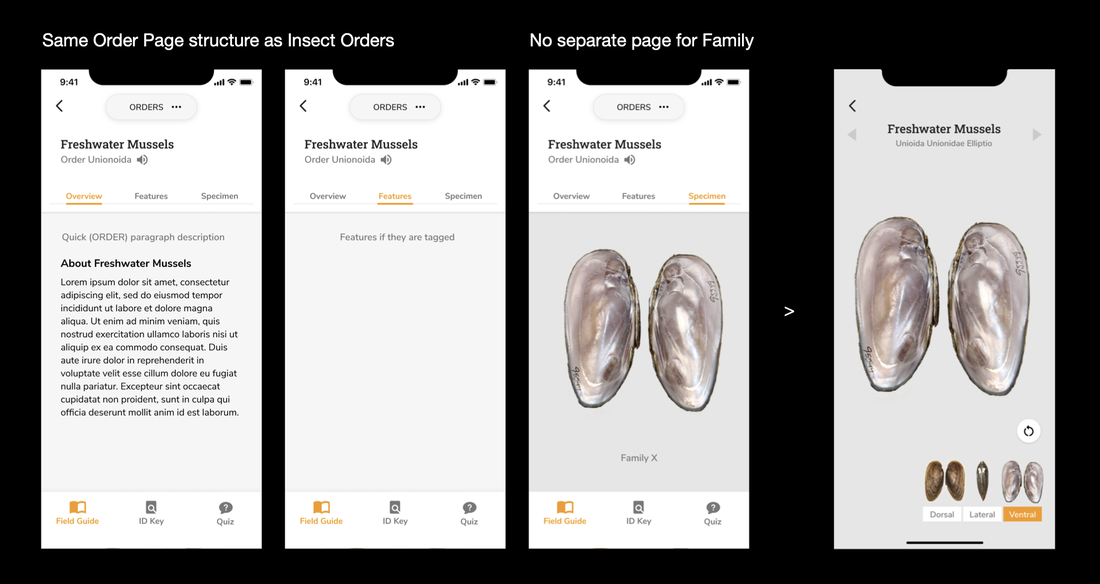

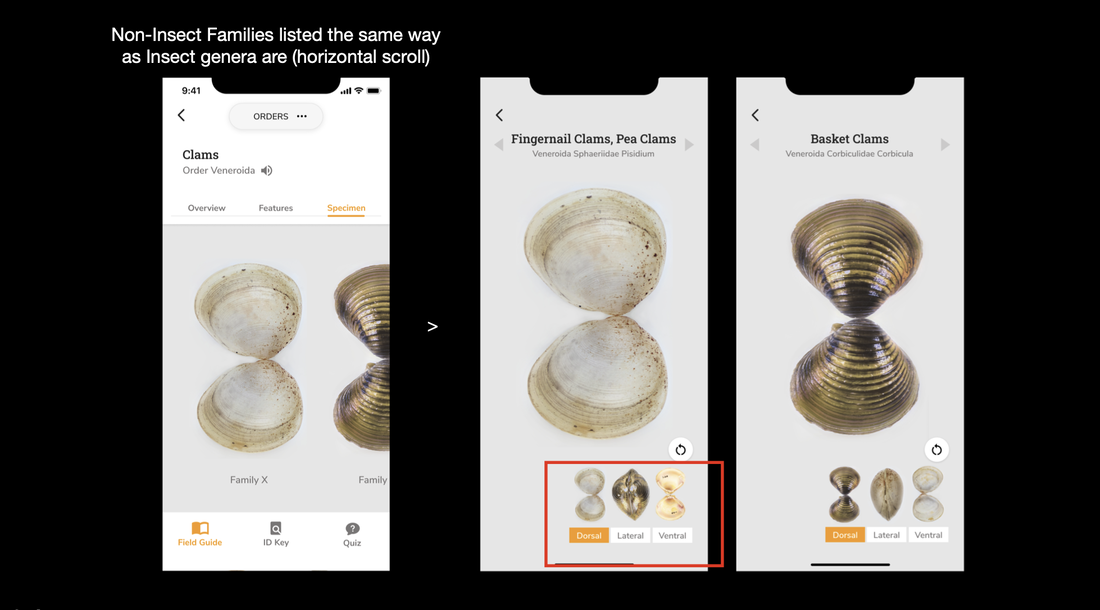

Another major piece of work I started was figuring out how to incorporate the few non-insects used in water monitoring and training into the structure of the mobile app. The challenge is that the non-insects aren't grouped neatly under one Class the way the insects are (Class Insecta); instead, they represent several different Classes. Neither the site nor the app currently supports Class-level categorization, so I had to decide how much of the existing architecture could be shifted to show these separations. In the end, to simplify the content, I removed the nested 'family' page and only showed the order-level page for non-insects; the database also has very little content for non-insects in the first place.

Working on Macroinvertebrates.org has been such fulfilling and rewarding work, and I'm honored that I got to contribute so much to an open-source educational tool. I've learned a lot these past 3(?) years, tried a lot of things, and realized I'm a lot better at spreadsheets than I previously knew. This project has been such a formative part of my undergrad experience, which I'll carry with me as I start a fellowship at the New York Times this June. I'll definitely miss Marti and Chris and the rest of the (very small) team!

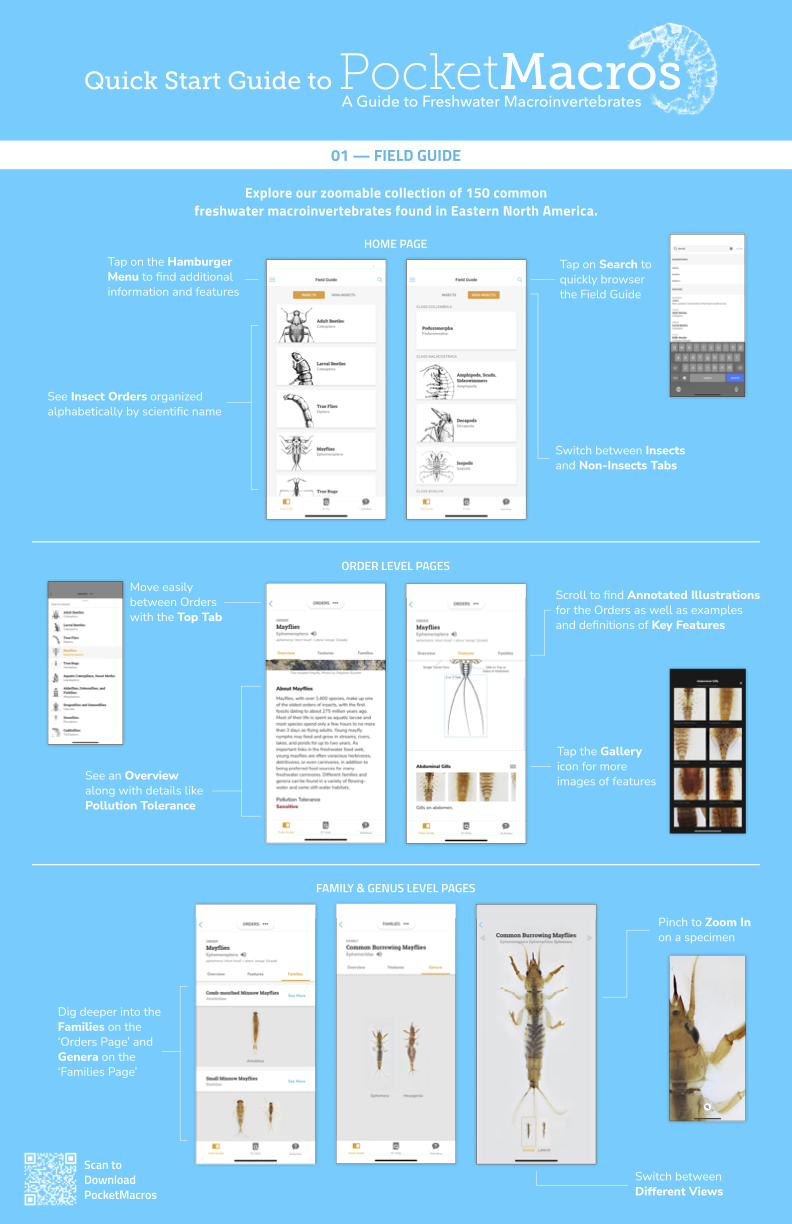

Although I've had other roles this semester, I've been focused on the development of the Quiz section of the app. I had a separate assignment through my independent study to iterate on the quiz prototype. The following details my process:

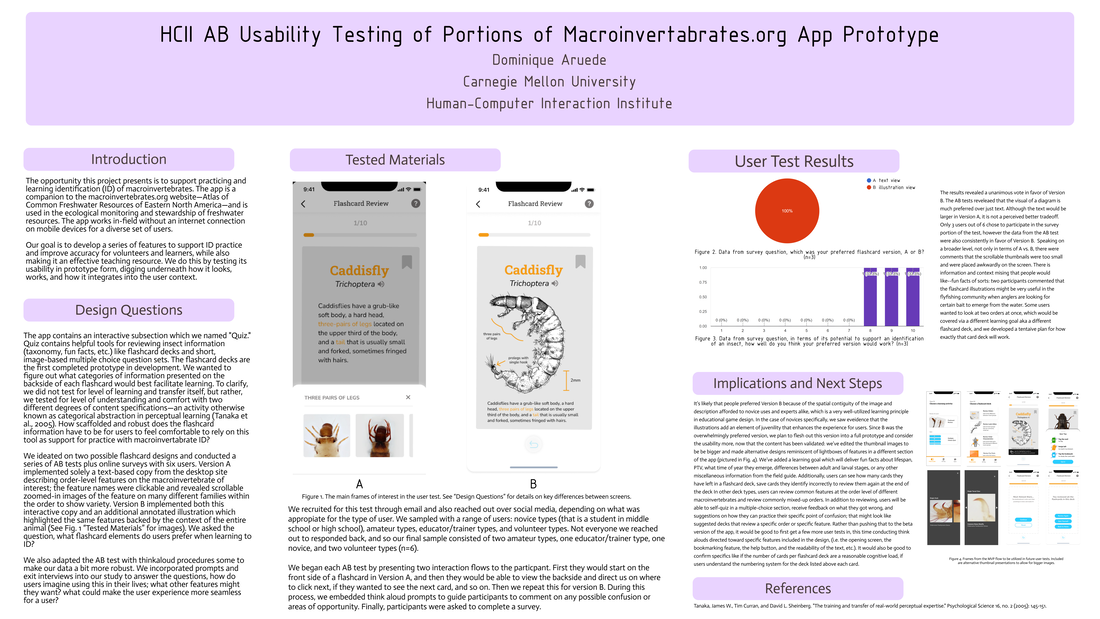

Design Challenge

The app contains an interactive subsection which we named "Quiz." Quiz contains helpful tools for reviewing insect information (taxonomy, fun facts, etc.), such as flashcard decks and short, image-based multiple-choice question sets. The flashcard decks are the first component of the prototype to reach a user-testable version in development. We wanted to figure out which categories of information on the back of each flashcard would best facilitate learning. To clarify, I did not test for learning and transfer themselves, but rather for users' level of understanding and comfort with two different levels of content detail. How scaffolded and robust does the flashcard information need to be for users to feel comfortable relying on this tool (Quiz) as support for practicing macroinvertebrate ID?

I ideated on two possible flashcard designs and conducted a series of AB tests plus online surveys with six users. Version A used only the text copy from the desktop site describing the order-level features of the macroinvertebrate of interest; the feature names were clickable and revealed scrollable, zoomed-in images of that feature on many different families within the order to show variety. Version B included this same interactive copy plus an annotated illustration highlighting the same features in the context of the entire animal (the annotations are also clickable and lead to the same visual examples); see the images below. I asked: what flashcard elements do users prefer when learning to ID?

Mockup Versions and Final Prototype Iterations Developed

Below are animated examples of both flows that users were taken through during testing. Participants were not shown the full scope of the quiz, only the front and back of the flashcards in Version A and Version B. Version A is on the left and Version B is on the right.

first page of one of the data collection sheets used in this study

I recruited participants for this test through email and social media, depending on what was appropriate for each type of user. We sampled a range of users: novice types (middle or high school students), amateur types, educator/trainer types, and volunteer/designer types. Not everyone I reached out to responded, so my final sample consisted of two amateur types, one educator/trainer type, one novice, and two designer types (n=6).

I began each AB test by presenting two flows to the participant. First, they started on the front side of a flashcard in Version A; they could then view the back side and direct me where to click next, whether to see the next card, and so on. We then repeated this for Version B. Throughout this process, we embedded think-aloud prompts to encourage participants to comment on any points of confusion or areas of opportunity. Finally, participants were asked to complete a survey.

Findings with Design Implications

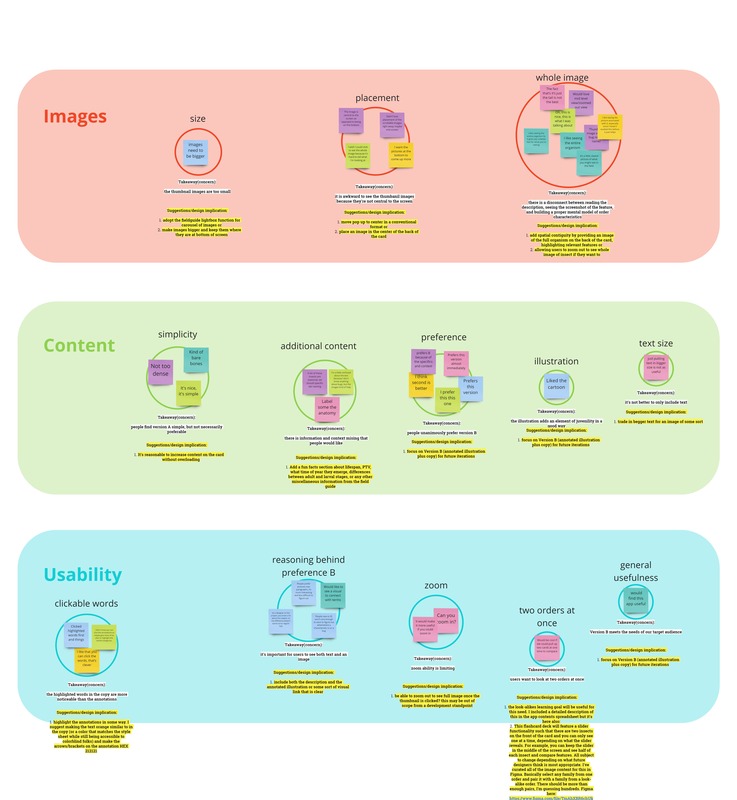

Both amateur-type participants and the educator/trainer joined the interview over Zoom. The novice joined over FaceTime, and the two designer-type participants took part in person. During each interview, I took notes on individual participants' comments and reactions in a pre-made data collection sheet. No transcripts were generated for these interviews, but the interviews conducted over Zoom were all recorded with video and audio. I then went back to the recordings to fill in information I had missed while conducting the interviews. The next step was to transfer every individual data point to a synthesis workspace in Miro and organize it first by participant, labeling each data point with tags that would be useful for sorting later (e.g., "suggestion," "preference," "Version A," "Version B"). Finally, I built an affinity diagram, grouping data points by concern, summarizing each pain point or comment, and developing suggestions and design implications for future designers on this project to refer to. I identified three main concern areas. Below are the findings:

1. Image Size

2. Content Simplicity

- Takeaway: There is information and context missing that people would like.
  - Implication: Add a fun facts section about lifespan, PTV (pollution tolerance value), what time of year they emerge, differences between adult and larval stages, or any other miscellaneous information from the field guide.

- Takeaway: People unanimously prefer Version B.
  - Implication: Focus on Version B (annotated illustration plus copy) for future iterations.

- Takeaway: The illustration adds an element of juvenility in a good way.
  - Implication: Focus on Version B (annotated illustration plus copy) for future iterations.

- Takeaway: It's not better to only include text.
  - Implication: Trade in bigger text for an image of some sort.

3. Usability

Clickable Words

- Takeaway: The highlighted words in the copy are more noticeable than the annotations.
  - Implication: Highlight the annotations in some way. I suggest making the text orange, as in the copy (or a color that matches the style sheet while remaining accessible to colorblind users), and making the arrows/brackets on the annotations #212121.

- Takeaway: Users value spatial contiguity; in other words, it's important for users to see the text and the image near each other.
  - Implication: Include both the description and the annotated illustration, or some other clear visual link between them.

- Takeaway: The lack of zoom is limiting.
  - Implication: Allow users to zoom out to see the full image once a thumbnail is clicked? This may be out of scope from a development standpoint.

- Takeaway: Users want to look at two orders at once.
  - Implication: The look-alikes learning goal will address this need. I included a detailed description in the app contents spreadsheet, but it's also here:

This flashcard deck will feature a slider: there are two insects on the front of the card, and the slider controls how much of each one is visible. For example, you can keep the slider in the middle of the screen to see half of each insect and compare features. All of this is subject to change depending on what future designers think is most appropriate. I've curated all of the image content for this in Figma: select any family from one order and pair it with a family from a look-alike order. There should be more than enough pairs, likely hundreds. Figma here.

- Takeaway: Version B meets the needs of our target audience.
  - Implication: Focus on Version B (annotated illustration plus copy) for future iterations.

Taking all of this into account, I developed an interactive prototype in Figma, incorporating the minimal edits suggested by the synthesis (i.e., fleshing out Version B with the proper annotations for the illustrations and fixing some small UI things like the help button).

I also prototyped an alternative way to display the images, using a larger aspect ratio and drawing on the design of the lightbox feature in the Field Guide, a suggestion first proposed by my colleague, Estelle Jiang. I also prototyped the flow that follows when the bookmark button is pressed on a card: upon completing the whole deck, the user is presented with a new deck containing only the bookmarked cards, to review once more or as many times as they like. Once the user indicates that they've mastered those cards, they see an end screen with options. Note that this version of the flashcards contains a 'back' button near the bottom of each card, but that is strictly for prototyping purposes: Figma doesn't distinguish between left and right swipes and registers only a general swipe. In the real beta, a left swipe means 'Card Mastered,' a right swipe means 'Undo Last Swipe' or 'Go Back,' and the bookmark button means 'Study Again,' so there will be no need for a physical back button. Below is a demo of everything described above.
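For future developers, the swipe-and-bookmark flow described above can be modeled as a small state machine. What follows is only a minimal sketch under stated assumptions, not the app's actual code: the names `Card`, `Action`, `Gesture`, `gestureToAction`, and `FlashcardSession` are hypothetical, and the choice to clear undo history when a new "Study Again" round starts is an assumption the prototype does not specify. Otherwise it follows the behavior described: left swipe marks a card mastered, right swipe undoes the last swipe, the bookmark button queues a card for another round, and the session ends once a round finishes with nothing bookmarked.

```typescript
// Hypothetical sketch of the flashcard flow; all names are illustrative only.

type Action = "mastered" | "studyAgain" | "undo";
type Gesture = "swipeLeft" | "swipeRight" | "bookmark";

// Gesture mapping as described for the beta: left = Card Mastered,
// right = Undo Last Swipe, bookmark button = Study Again.
const gestureToAction: Record<Gesture, Action> = {
  swipeLeft: "mastered",
  swipeRight: "undo",
  bookmark: "studyAgain",
};

interface Card {
  id: string;
  front: string; // e.g. the insect order shown on the card
}

class FlashcardSession {
  private deck: Card[];
  private position = 0;
  private round = 1;
  // Cards bookmarked during the current pass through the deck.
  private studyAgain: Card[] = [];
  // One entry per swipe in the current round, so "undo" can reverse it.
  private history: { position: number; bookmarked: boolean }[] = [];

  constructor(cards: Card[]) {
    this.deck = [...cards];
  }

  current(): Card | undefined {
    return this.deck[this.position];
  }

  getRound(): number {
    return this.round;
  }

  // True once the latest round ends with no bookmarked cards,
  // i.e. the point where the end screen would be shown.
  isFinished(): boolean {
    return this.position >= this.deck.length;
  }

  act(action: Action): void {
    if (action === "undo") {
      const last = this.history.pop();
      if (last === undefined) return; // nothing to undo in this round
      this.position = last.position;
      if (last.bookmarked) this.studyAgain.pop();
      return;
    }
    const card = this.current();
    if (card === undefined) return; // session already finished
    const bookmarked = action === "studyAgain";
    if (bookmarked) this.studyAgain.push(card);
    this.history.push({ position: this.position, bookmarked });
    this.position += 1;
    // End of the deck with bookmarks pending: start a new round that
    // contains only the bookmarked cards. Undo history does not carry over.
    if (this.position >= this.deck.length && this.studyAgain.length > 0) {
      this.deck = this.studyAgain;
      this.studyAgain = [];
      this.history = [];
      this.position = 0;
      this.round += 1;
    }
  }
}

// Example with three insect orders as placeholder cards.
const session = new FlashcardSession([
  { id: "1", front: "Ephemeroptera" },
  { id: "2", front: "Plecoptera" },
  { id: "3", front: "Trichoptera" },
]);
session.act(gestureToAction.swipeLeft); // card 1 mastered
session.act(gestureToAction.bookmark); // card 2 marked "Study Again"
session.act(gestureToAction.swipeLeft); // card 3 mastered; round 2 begins
console.log(session.getRound(), session.current()?.front); // prints: 2 Plecoptera
session.act(gestureToAction.swipeLeft);
console.log(session.isFinished()); // prints: true
```

Keeping the bookmarked cards in a separate queue, rather than re-inserting them into the current deck, mirrors the prototype's behavior of presenting them only after the whole deck has been completed.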

Helpful Links

- Missing Quiz Content Spreadsheet

- Common Mistakes (renamed to 'review look-alikes') learning content

- AB Testing Documentation and Raw Data (e.g., protocol, survey, notes, recordings)

- AB Testing Synthesis

Authors: Dominique Aruede (Carnegie Mellon University), Marti Louw (Carnegie Mellon University)

Abstract:

The opportunity this project presents is to support practicing and learning identification (ID) of macroinvertebrates. The app is a companion to the macroinvertebrates.org website and works in the field without an internet connection on mobile devices for a diverse set of users. The prototype has an interactive subsection, which we named "Quiz." Quiz contains helpful tools for reviewing insect information (taxonomy, fun facts, etc.), such as flashcard decks and short, image-based multiple-choice question sets. We ideated on two possible flashcard designs and conducted a series of AB tests plus online surveys with six users. We asked: what flashcard elements do users prefer when learning to ID? We found that participants unanimously preferred the version that coupled an illustration with the description of each insect's order-level characteristics over the version that had only a textual description with close-up views of characteristics.

Meeting of the Minds Live Symposium Poster Link

Macroinvertebrates.org: A Digital Tool for Supporting Identification Activities During Water Quality Biomonitoring Trainings

Authors: Alice Fang (Carnegie Mellon University), Marti Louw (Carnegie Mellon University), Camellia Sanford-Dolly (Rockman et al), Andrea Kautz (Carnegie Museum of Natural History), Dr. John Morse (Clemson University)

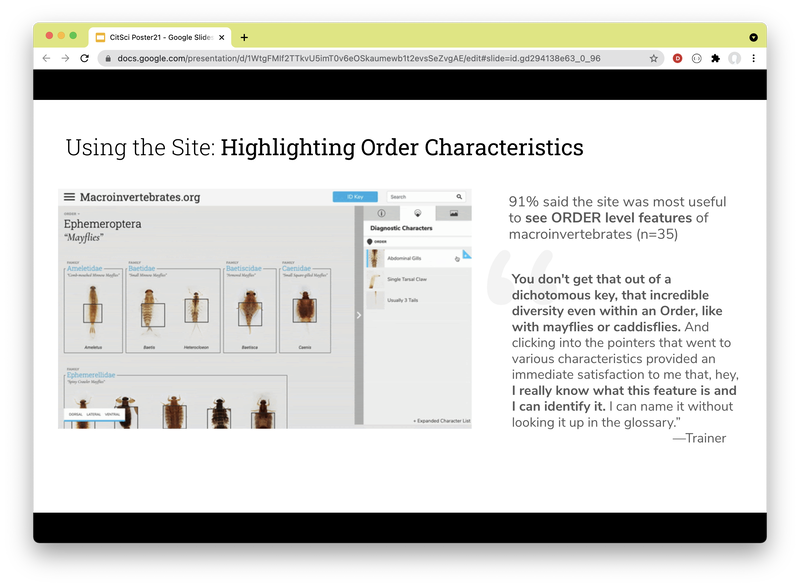

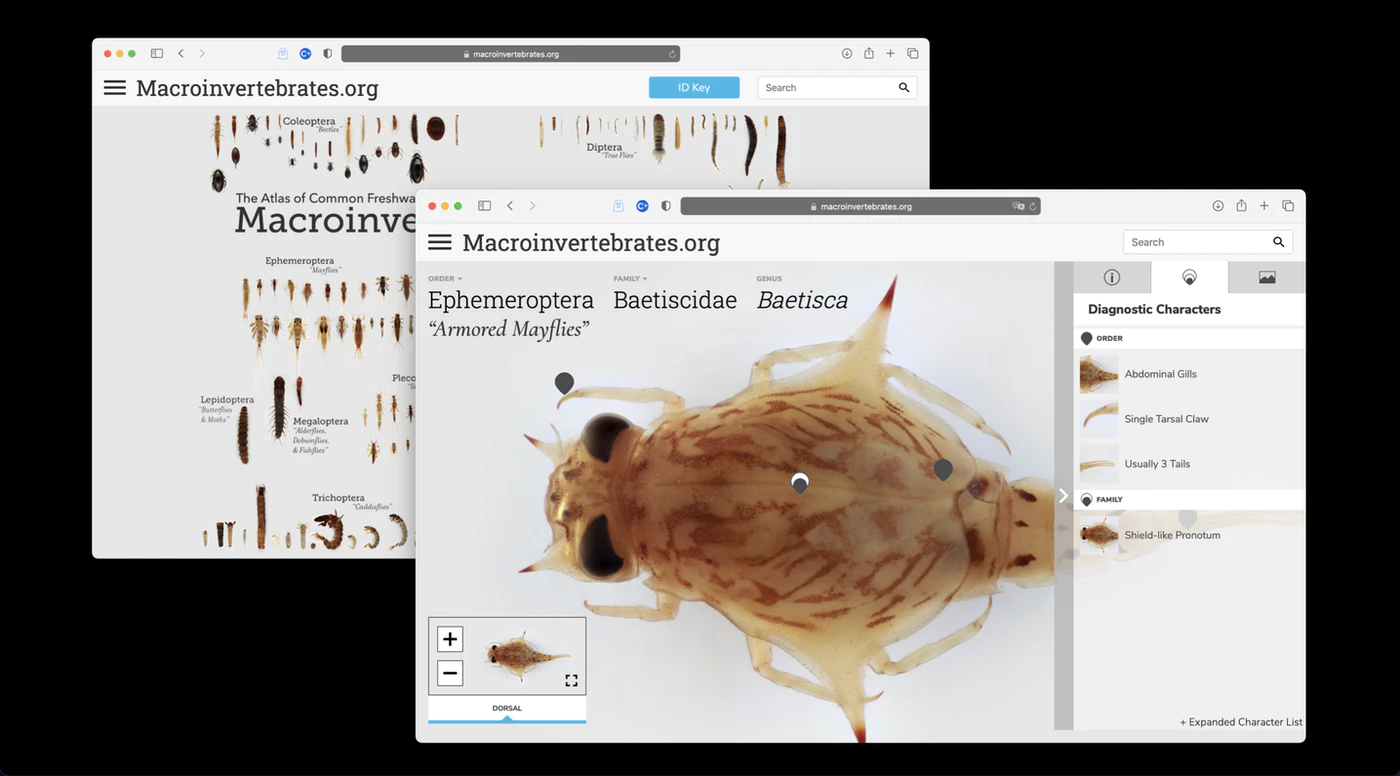

Abstract: Macroinvertebrates.org is a visual teaching and learning resource to support identification activities in water quality biomonitoring. The online tool was developed with diverse stakeholders (including trainers, professional scientists, educators, and volunteers) through a codesign process. In this poster, we report on design research and evaluation data collected from trainers that characterizes how they integrated the site into their training workshops, and features they found useful.

Survey, interview, and observation data revealed that Macroinvertebrates.org provided a compelling visual reference tool for trainers, allowing users to easily zoom in on the key diagnostic characteristics needed for identification. Trainers reported that the site made it easier for them to train volunteers to identify to Order and Family, and for volunteers to see relevant features and increase ID accuracy. Specifically, the site extended the utility of trainings by serving as a resource for volunteers to practice and review the ID process before and after trainings. In this poster, we report on the perspectives of trainers, gathered through the design research and formative evaluation process.

https://connect.citizenscience.org/posts/macroinvertebratesorg-a-digital-tool-for-supporting-identification-activities-during-water-quality-biomonitoring-trainings

Using Log Visualization to Interpret Online Interactions During Self-Quizzing Practice for Taxonomic Identification by Chelsea Cui, Jordan Hill, Marti Louw, Jessica Roberts.

We were excited to present CMU alumna and former REU student Chelsea Cui's study at the 2021 annual meeting of the American Educational Research Association (AERA). Chelsea analyzed log data from participants in a 10-day study in which they used our quiz feature to practice aquatic macroinvertebrate ID. We analyzed interactions with the quiz tool along with accuracy on pre- and post-tests to determine how learners at different experience levels were using the quizzing platform. Through the custom visualization platform, we were able to glean insights on how to improve our quizzing platform for future use.

See the poster here: https://aera21-aera.ipostersessions.com/Default.aspx?s=D0-9D-A0-1B-C0-49-87-5B-8A-3F-56-A7-02-C5-ED-66#

Project Team

An interdisciplinary team of entomologists, learning scientists, software engineers, and designers collaborating to improve macroinvertebrate identification training and technologies with volunteer biomonitoring organizations.
